Yet another definition of Intelligence

After my recent article about the legal implications of artificial neural networks, I had the privilege of sharing and comparing my point of view with a few experts in the field during my talk at the seminar organized by the “Università degli Studi Milano Bicocca” in collaboration with “The Innovation Group”.

For this great and unexpected privilege I have to thank in particular Carlo Batini and Roberto Masiero, who gave me the chance to meet and talk with many brilliant professors.

Most of my talk was an application of common sense: behind the hype, the marketing and the cultural (and economic) pressure of lobbies, there is just software, deterministic like any other. Full of bugs, like any other.

However, the title of the seminar, “Myths and reality of Artificial Intelligence: Theoretical issues and practical developments”, and the expertise of the audience called for a more technical contribution than my first article on the topic.

Thus I dared to propose yet another definition of intelligence.

This definition was useful to understand how far we are from an artificial general intelligence, but also to foresee which characteristics it will have.

Unfortunately, two slides were not enough to explain it: talking with the audience after the seminar, I realized that it deserves a more in-depth analysis, including a review of its advantages and disadvantages.

So here I try to expand on the definition of intelligence from the slides:

```haskell
type Knowledge = ([Set], [Function])

type Intelligence = (Perception, Knowledge) -> ([Action], Knowledge)

comprehension :: (Perception, Knowledge) -> Knowledge
imagination   :: Knowledge -> [Prediction]
will          :: [Prediction] -> [Decision]
execution     :: ([Decision], Knowledge) -> [Action]
abstraction   :: (Perception, Knowledge) -> Knowledge

-- Human Intelligence
-- NOTE: first we react to perceptions, then we learn
human :: Intelligence
human (perception, old_knowledge) = (actions, new_knowledge)
  where
    new_knowledge    = abstraction (perception, old_knowledge)
    useful_knowledge = comprehension (perception, old_knowledge)
    predictions      = imagination useful_knowledge
    decisions        = will predictions
    actions          = execution (decisions, old_knowledge)

-- _Hypothetical_ Artificial General Intelligence
-- NOTE: it can learn first and then use the new knowledge to react
machine :: Intelligence
machine (perception, initial_knowledge) = (actions, new_knowledge)
  where
    new_knowledge    = abstraction (perception, initial_knowledge)
    useful_knowledge = comprehension (perception, new_knowledge)
    predictions      = imagination useful_knowledge
    decisions        = will predictions
    actions          = execution (decisions, new_knowledge)
```
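The model above only gives type signatures, so it does not run on its own. To check that the pieces fit together, here is a minimal runnable instantiation. Everything concrete in it is an assumption for illustration and not part of the definition: perceptions, predictions, decisions and actions are plain strings, knowledge is simplified to a flat list of known facts instead of `([Set], [Function])`, and each of the five components is a deliberately trivial placeholder.

```haskell
import Data.List (isInfixOf)

-- Hypothetical toy types, chosen only to make the model executable.
type Perception   = String
type Prediction   = String
type Decision     = String
type Action       = String
type Knowledge    = [String]   -- simplification: a list of known facts
type Intelligence = (Perception, Knowledge) -> ([Action], Knowledge)

-- comprehension: keep only the facts that mention the perception.
comprehension :: (Perception, Knowledge) -> Knowledge
comprehension (p, k) = filter (p `isInfixOf`) k

-- imagination: every relevant fact becomes a prediction.
imagination :: Knowledge -> [Prediction]
imagination = map ("expect: " ++)

-- will: decide to act on each prediction (no real choice in this toy).
will :: [Prediction] -> [Decision]
will = map ("do something about " ++)

-- execution: decisions become actions; knowledge is unused in this toy.
execution :: ([Decision], Knowledge) -> [Action]
execution (ds, _) = ds

-- abstraction: "learning" is just remembering the perception.
abstraction :: (Perception, Knowledge) -> Knowledge
abstraction (p, k) = p : k

human :: Intelligence
human (perception, old_knowledge) = (actions, new_knowledge)
  where
    new_knowledge    = abstraction (perception, old_knowledge)
    useful_knowledge = comprehension (perception, old_knowledge)
    predictions      = imagination useful_knowledge
    decisions        = will predictions
    actions          = execution (decisions, old_knowledge)

machine :: Intelligence
machine (perception, initial_knowledge) = (actions, new_knowledge)
  where
    new_knowledge    = abstraction (perception, initial_knowledge)
    useful_knowledge = comprehension (perception, new_knowledge)
    predictions      = imagination useful_knowledge
    decisions        = will predictions
    actions          = execution (decisions, new_knowledge)

main :: IO ()
main = do
  let k0 = ["rain makes roads wet"]
  print (human ("fire", k0))    -- ([],["fire","rain makes roads wet"])
  print (machine ("fire", k0))  -- (["do something about expect: fire"],["fire","rain makes roads wet"])
```

Both agents end up with the same knowledge; only the machine already reacts to the fire it has just perceived.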

A function, but not a black box

In this definition, intelligence composes (at least) five functions:

- comprehension, which filters the knowledge relevant to the perception

- imagination, which uses the relevant knowledge to make predictions

- will, which uses predictions to decide what to do

- execution, which turns decisions into actions

- abstraction, which derives new knowledge from perceptions and existing knowledge

We need to look inside the inner workings of intelligence because we cannot assume an equality relation on the codomain of the function: as everybody knows, the exact same action performed in different places or at different times takes on a completely different meaning and produces different effects.

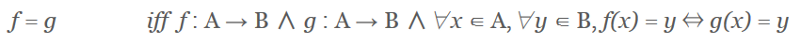

Indeed, if we do not assume an equality relation in the codomain, we cannot state that two functions are equal if they produce the same result given the same argument: two functions are equal if and only if they follow the same rule!

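In other words, the usual extensional definition, in which f = g whenever f(x) = g(x) for every x, is not available here, because the comparison f(x) = g(x) is itself an equality in the codomain.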

This simple mathematical argument constitutes an objection to current uses of ANNs: you cannot say that a deep ANN approximates intelligence if you cannot explain how it works and debug it.

It could be approximating madness as well! :-)
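Haskell's type system makes this argument concrete. Checking that two functions agree on some inputs requires an `Eq` instance on their result type, that is, an equality relation on the codomain. When the results have no equality (in this sketch, results that are themselves functions), the comparison does not even type-check; and when it does type-check, agreement on the tested inputs says nothing about whether the rules are the same. The names `agreeOn`, `doubleA`, `doubleB`, `adderA` and `adderB` are hypothetical, chosen only for illustration.

```haskell
-- Compare two functions by their outputs on a finite list of inputs.
-- This only works thanks to the `Eq b` constraint: equality on the codomain.
agreeOn :: Eq b => [a] -> (a -> b) -> (a -> b) -> Bool
agreeOn xs f g = all (\x -> f x == g x) xs

-- Two different rules that happen to produce the same results.
doubleA, doubleB :: Int -> Int
doubleA x = x + x
doubleB x = 2 * x

-- A codomain without equality: each result is a function,
-- and Haskell provides no Eq instance for `Int -> Int`.
adderA, adderB :: Int -> (Int -> Int)
adderA n = \m -> n + m
adderB n = \m -> m + n

main :: IO ()
main = do
  -- True, even though the two rules are different.
  print (agreeOn [0 .. 100] doubleA doubleB)
  -- The next line is rejected by the compiler: no instance for Eq (Int -> Int).
  -- print (agreeOn [0 .. 100] adderA adderB)
```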

Knowledge… what?

We know we cannot trust any AI that works like a black box. It’s too easy to hide bias or prejudice behind an inscrutable black box.

Thus I define intelligence as a function that produces actions and knowledge given existing knowledge and a new perception. This definition can be mapped to the definition of an intelligent agent by Legg and Hutter, but with an explicit accumulation of knowledge.

Thus intelligence can be thought of as a stateful function that accumulates knowledge.
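Treating intelligence as a stateful function means that, over a sequence of perceptions, knowledge is threaded from one step to the next while actions are emitted at each step. In Haskell this is exactly the shape of `mapAccumL` from `Data.List`. The sketch below assumes a hypothetical toy `step` function, standing in for any `Intelligence`, whose knowledge is just the list of distinct perceptions seen so far.

```haskell
import Data.List (mapAccumL)
import Data.Tuple (swap)

type Perception   = String
type Action       = String
type Knowledge    = [String]
type Intelligence = (Perception, Knowledge) -> ([Action], Knowledge)

-- Hypothetical toy intelligence: notice what is new, ignore what is known,
-- and remember every new perception.
step :: Intelligence
step (p, k)
  | p `elem` k = ([], k)
  | otherwise  = (["notice " ++ p], p : k)

-- Run an intelligence over a stream of perceptions, threading knowledge
-- as state and collecting the actions produced at each step.
live :: Intelligence -> Knowledge -> [Perception] -> (Knowledge, [[Action]])
live f = mapAccumL (\k p -> swap (f (p, k)))

main :: IO ()
main = print (live step [] ["cat", "dog", "cat"])
-- (["dog","cat"],[["notice cat"],["notice dog"],[]])
```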

We do not need to define Knowledge; we “just” need it to be something that can be inspected, aggregated, filtered, projected into the future and used to turn decisions into actions.
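One way to read this requirement is as an interface: any type that offers these five operations would qualify as knowledge. The type class below is a hypothetical sketch of such an interface; the class name, method names and signatures are my own choices, not part of the definition, and the naive list-of-facts instance is there only to show that it can be satisfied.

```haskell
-- Hypothetical interface for "something that can be used as knowledge".
class KnowledgeLike k where
  inspect   :: k -> [String]               -- make the content observable
  aggregate :: k -> k -> k                 -- merge two bodies of knowledge
  filterBy  :: (String -> Bool) -> k -> k  -- keep only the relevant part
  project   :: k -> [String]               -- derive expectations about the future
  enact     :: [String] -> k -> [String]   -- turn decisions into actions

-- A deliberately naive instance: knowledge as a list of facts.
newtype Facts = Facts [String]

instance KnowledgeLike Facts where
  inspect (Facts fs)            = fs
  aggregate (Facts a) (Facts b) = Facts (a ++ b)
  filterBy p (Facts fs)         = Facts (filter p fs)
  project (Facts fs)            = map ("likely again: " ++) fs
  enact ds (Facts fs)           = [d ++ " (knowing " ++ show (length fs) ++ " facts)" | d <- ds]

main :: IO ()
main = do
  let k = aggregate (Facts ["sun rises"]) (Facts ["rain falls"])
  print (inspect (filterBy (elem 'u') k))  -- ["sun rises"]
  print (project k)
```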

However, to ease our reasoning, I have included a definition of knowledge as the list of Sets that we know about and the list of Functions that we know about.

Some sets are empty (like the set of “intelligent machines so far” or the set of “unicorns”), others are singletons (like the set of “my mom”), others are countable and finite (the set of “my friends”), and so on.

Some functions are simple and generic (like counting things), others are pretty complex, like the aggregation of actions performed to turn a child into a man (also known as “education”).

(Yeah, there is a reason why I used Haskell to describe a model of intelligence! You know… a kid with a hammer…)

This definition is both general and powerful: the definition of intelligence can be part of our knowledge (it is a function, after all), just like the definition of knowledge itself (a pair of lists), and we can use our knowledge to make predictions by applying functions to sets.
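A toy version of this view of knowledge, using `Data.Set` from the `containers` package that ships with GHC: a few named sets (empty, singleton, finite) and a couple of generic functions, such as counting, applied to them. The names and contents are invented for illustration, and a "prediction" here is simply the result of applying a known function to a known set.

```haskell
import qualified Data.Set as Set
import Data.Set (Set)

-- Hypothetical sets we "know about", each with a name.
knownSets :: [(String, Set String)]
knownSets =
  [ ("unicorns",   Set.empty)
  , ("my mom",     Set.singleton "mom")
  , ("my friends", Set.fromList ["Ada", "Alan", "Grace"])
  ]

-- Hypothetical generic functions we "know about".
knownFunctions :: [(String, Set String -> String)]
knownFunctions =
  [ ("count",  \s -> show (Set.size s))
  , ("exists", \s -> if Set.null s then "no" else "yes")
  ]

-- Knowledge as in the model: the list of sets and the list of functions.
type Knowledge = ([(String, Set String)], [(String, Set String -> String)])

knowledge :: Knowledge
knowledge = (knownSets, knownFunctions)

-- Apply every known function to every known set.
predictions :: Knowledge -> [String]
predictions (sets, fns) =
  [ fname ++ " " ++ sname ++ " = " ++ f s | (sname, s) <- sets, (fname, f) <- fns ]

main :: IO ()
main = mapM_ putStrLn (predictions knowledge)
```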

However, I happily admit that this definition of knowledge is “naive”, in the sense that it is based on an informal intuition of set theory. Still, intelligence keeps working pretty well even when it faces paradoxes, mainly because it can comprehend: it can understand the context and filter the relevant knowledge.

I’m not competent enough to choose an axiomatic set theory suited to this context, in particular because some of the sets we are considering have no equality relation among their elements. I guess this affects the axiom of choice, for example, but I can’t really say how.
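The trouble with elements that lack equality shows up quickly in code: `Data.Set` requires an `Ord` instance on its elements, so a set of functions cannot be built that way. One workaround, sketched below as an assumption and not as a proposal for the right set theory, is to describe such a set intensionally by its membership predicate. We can still test membership and intersect sets, but we can no longer enumerate, count or deduplicate their elements. `PredSet`, `member`, `intersection` and the two example sets are hypothetical names.

```haskell
-- A set described only by its characteristic function (membership test).
newtype PredSet a = PredSet (a -> Bool)

member :: a -> PredSet a -> Bool
member x (PredSet p) = p x

intersection :: PredSet a -> PredSet a -> PredSet a
intersection (PredSet p) (PredSet q) = PredSet (\x -> p x && q x)

-- Hypothetical sets of functions Int -> Int, a type with no Eq or Ord instance.
-- Membership is decided by probing behaviour, never by comparing functions.
fixesZero :: PredSet (Int -> Int)
fixesZero = PredSet (\f -> f 0 == 0)

nonDecreasingOnSample :: PredSet (Int -> Int)
nonDecreasingOnSample =
  PredSet (\f -> let ys = map f [0 .. 10] in and (zipWith (<=) ys (drop 1 ys)))

main :: IO ()
main = do
  let both = intersection fixesZero nonDecreasingOnSample
  print (member (* 2) both)   -- True
  print (member (+ 1) both)   -- False: (+ 1) maps 0 to 1
  print (member negate both)  -- False: negate is decreasing
  -- There is no way to ask how many elements `both` has, or to list them.
```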

Still, note that any definition of Knowledge would do, as long as it can be inspected, aggregated, filtered, projected into the future and used to turn decisions into actions.

Advantages

The most important advantage of this definition is its transparency. Each component can be studied, tested and debugged in isolation.
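Because each component is an ordinary function, it can be checked on its own with hand-picked inputs, without running the whole pipeline. The sketch below tests a hypothetical `comprehension` in isolation; representing a perception as a bag of words and each fact as a sentence tagged with keywords is an assumption made only for this example.

```haskell
import Data.List (intersect)

type Perception = [String]            -- hypothetical: a perception as a bag of words
type Fact       = (String, [String])  -- hypothetical: a fact and its keywords
type Knowledge  = [Fact]

-- comprehension alone: keep the facts sharing a keyword with the perception.
comprehension :: (Perception, Knowledge) -> Knowledge
comprehension (p, k) = [fact | fact@(_, kws) <- k, not (null (kws `intersect` p))]

-- A tiny hand-written test suite for this single component.
tests :: [(String, Bool)]
tests =
  [ ("empty knowledge stays empty",  null (comprehension (["smoke"], [])))
  , ("irrelevant facts are dropped", null (comprehension (["smoke"], [("rain wets roads", ["rain"])])))
  , ("relevant facts are kept",      map fst (comprehension (["smoke"], kb)) == ["smoke means fire"])
  ]
  where
    kb = [("smoke means fire", ["smoke", "fire"]), ("rain wets roads", ["rain"])]

main :: IO ()
main = mapM_ report tests
  where
    report (name, ok) = putStrLn ((if ok then "PASS " else "FAIL ") ++ name)
```

The same approach applies to `imagination`, `will`, `execution` and `abstraction`: each can get its own suite before they are composed.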

A general artificial intelligence working as a black box would be useless.

Not because of its technology, but because we couldn’t trust the humans who developed it.

Works well with humans

This definition of intelligence maps well to the different clusters analyzed by Sternberg in his work on the triarchic theory of intelligence.

In particular, each of the components analyzed by Sternberg’s theory can be mapped to one or more of the functions that intelligence composes.

For example, analytical giftedness could be explained as an effective development of comprehension and imagination, creative giftedness as an effective development of abstraction and imagination, and practical giftedness as an effective development of comprehension, will and execution.

An undeniable proof

The presence of abstraction in intelligence provides a test of a machine’s general intelligence that can be neither deferred nor denied.

To prove that it is general, an artificial intelligence should be able to discover, and explain to us, new abstractions (including functions) that we were not able to think of, just like a brilliant mathematician.

When this happens, humanity will not be able to raise the bar further.

When this happens, we will be facing the first non-human intelligence.

Also, this proof subsumes the Turing test and addresses the issues posed by the Chinese room: the machine would explain its own discoveries to us and, even if the hypothetical human running the algorithm inside the machine could not make such discoveries, the machine as a whole would.

Truly unsupervised learning

The presence of comprehension in intelligence means that, once created, an intelligent machine would not require any human supervision to learn.

This is pretty different from what we sell as “unsupervised learning” right now, because the selection of the calibration dataset and the choice of the features to consider are still delegated to humans, as are many other parameters of the algorithm, like the “learning rate”.

An intelligence, instead, selects the relevant information out of raw perceptions.

A little (but cumulative) edge over humans

This definition of intelligence also shows that any artificial general intelligence would have a little, but cumulative, edge over humans.

Or, to be fair, humans would have a little cumulative handicap compared to it.

Humans, indeed, first react to perceptions and only later learn from them.

This means that our reactions do not take into account what we learn from the very perception we are reacting to. This gift of evolution is optimal in a dangerous environment, but it is suboptimal when there are no threats to our life and reproduction.

An intelligent machine could easily fix this.

An artificial general intelligence could learn first and then react.
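In the `human` and `machine` definitions, the only difference is which knowledge `comprehension` and `execution` read, but over a stream of perceptions this difference adds up. In the hypothetical toy below, knowledge is the list of perceptions seen so far, and an agent reacts in an informed way only if the current perception is already part of the knowledge it reacts with. Both the stream and the scoring rule are assumptions chosen for illustration.

```haskell
import Data.List (mapAccumL)

type Perception = String
type Knowledge  = [Perception]

-- Hypothetical: a reaction is "informed" if the perception is already known.
informed :: Perception -> Knowledge -> Bool
informed = elem

-- React first (with the old knowledge), then learn.
humanStep :: Knowledge -> Perception -> (Knowledge, Bool)
humanStep k p = (p : k, informed p k)

-- Learn first, then react with the new knowledge.
machineStep :: Knowledge -> Perception -> (Knowledge, Bool)
machineStep k p = let k' = p : k in (k', informed p k')

-- Count the informed reactions over a stream, starting from empty knowledge.
score :: (Knowledge -> Perception -> (Knowledge, Bool)) -> [Perception] -> Int
score step ps = length (filter id (snd (mapAccumL step [] ps)))

main :: IO ()
main = do
  let stream = ["storm", "storm", "flood", "storm", "drought"]
  print (score humanStep stream)    -- 2
  print (score machineStep stream)  -- 5
```

In this toy the gap equals the number of first encounters in the stream, so it keeps growing as long as the environment keeps producing novelty.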

Disadvantages

The first limitation of this definition is that it is not rooted in a deep biological understanding of the brain. Also, while inspired by a limited understanding of CBT (cognitive behavioral therapy), it is not rooted in psychology.

I strongly believe in a multidisciplinary approach to technology, and this requirement is particularly important in AI research.

We need a multidisciplinary approach, but that is not what you will find here.

Anthropomorphism

An interesting objection is that this definition of intelligence is pretty anthropomorphic, which is something I myself criticize in AI parlance.

This is true.

To my eye, however, this specific problem is inherent to intelligence.

We have too few non-human intelligences to talk with. Zero, to be precise.

No current AI technology is actually intelligent.

And, in my opinion, when we talk about animal intelligence, we are just projecting our own experience onto animals to explain their behavior.

Yet no accountability

Another problem with this definition of intelligence is that it does not allow machines to be accountable for their actions, even if they are intelligent.

Indeed, the will function does not, by itself, require freedom.

A deterministic optimizer could be a pretty good implementation.
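To make this concrete: a `will` that simply maximizes a fixed score over the available predictions is a valid implementation of the `[Prediction] -> [Decision]` signature, and it is fully deterministic, since the same predictions always yield the same decision. The `Prediction` record with its `outcome` and `utility` fields is a hypothetical representation, not part of the original model.

```haskell
import Data.List (maximumBy)
import Data.Ord (comparing)

-- Hypothetical prediction: a possible outcome and how desirable it is.
data Prediction = Prediction { outcome :: String, utility :: Double }
  deriving Show

type Decision = String

-- A deterministic optimizer used as `will`: pick the outcome with the
-- highest utility. No freedom is involved: equal inputs give equal outputs.
will :: [Prediction] -> [Decision]
will [] = []
will ps = [outcome (maximumBy (comparing utility) ps)]

main :: IO ()
main = do
  let ps = [Prediction "brake" 0.9, Prediction "swerve" 0.4, Prediction "accelerate" 0.1]
  print (will ps)  -- ["brake"]
```

Being a pure function, it can only compute its answer from its inputs, which is the sense in which, in this argument, it computes rather than decides.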

But without free will, you cannot be responsible for your actions.

That’s what I mean when I say that AI cannot decide over humans.

Computers compute; they do not decide.

This opens the door to all sorts of funny issues.

Think, for example, of an AI driving a car. Who is accountable for the deaths it causes?

Let’s assume we put the programmers in jail, since we don’t want to bother the stockholders: but which programmers? What if they used an open source library? What if the library is buggy? And what if the bug is discovered years later? Or what if the bug was fixed, but the software was not updated?

And what about the rest of the car? What about the sensors? What about the wheels?

Nothing about efficiency

One of the well-known limitations of the statistical tools currently sold under the AI umbrella is that they need tons of data to learn. Possibly personal data.

Humans do not need so much information to learn.

And indeed, there is a lot of less-hyped research that tries to address this issue.

This definition, however, does not take efficiency into account: any of the functions that compose intelligence might be approximated statistically, as long as the implementation is reproducible and you can prove that it actually approximates the right function.

In practice, however, data efficiency will be an issue.

Nothing about phenomenology

A milder approach to AI marketing is to talk about “intelligence augmentation”: instead of bad AIs stealing our jobs, it presents AI as a tool that can help us in our daily activities.

Actually, I think this is the best use we can make of these statistical tools.

However, before their mass distribution, we should spread knowledge of their inner workings, to avoid the issues that I describe as “business-aided ignorance” in the slides. So far, the dangers come from people, not technology.

AI can work as a telescope.

But, like a telescope, while it shows us something that we could not see with our eyes, it hides something else. It’s important to be aware of what it hides.

On the other hand, there is no reason to think that an artificial superintelligence would be a threat to us. At least, not a Terminator-style threat.

Without pain and without a need to reproduce itself, it could be pretty benevolent to its own creators. It would probably serve us as well as possible.

The real risk is evolutionary.

Thinking is an expensive activity. It consumes more than 20% of our energy.

Indeed, we are quite used to delegating thinking to others: intellectuals, leaders, entrepreneurs, politicians… even programmers… all serve our need to delegate this expensive activity.

But while paper reduced our need to remember and calculators reduced our need to calculate, these technologies also reduced our ability to do such things.

It’s the paradox of automation.

An artificial general intelligence would reduce our need to think.

Such a superintelligence would not need a T-800 to seize power on Earth.

It would just need a bit of patience and irony, and we would evolve back into apes.